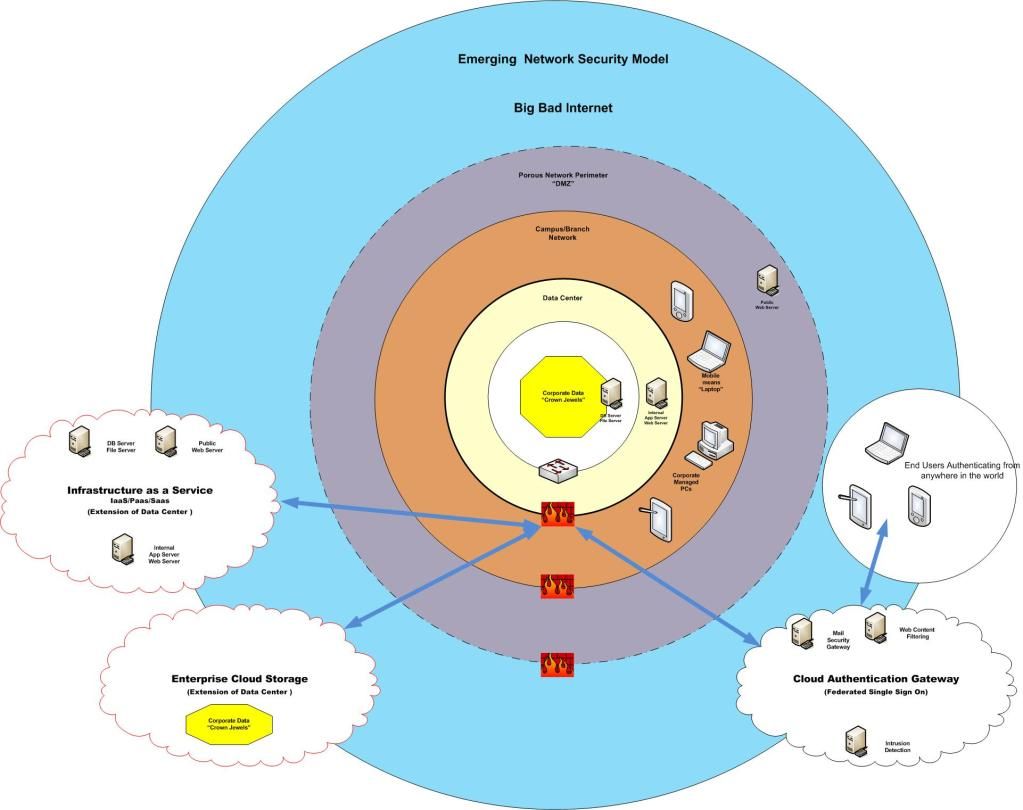

Even in complex environments, we have had the comfort of knowing where our network perimeter connected to the public Internet, and could apply stringent controls to secure that traffic.

Mail filters, URL filters, Intrusion Prevention... All of your communications with the Internet channeled through your firewall like good little cattle...

I miss those days....

Today, and even more so in the near future, those "perimeters" are becoming more and more porous. Virtualization has taken the data center by storm, and Lines of Business are realizing the value in placing their new application or service with a "Cloud Provider". All manner of valid reasons are mentioned, from "Business Continuity" and "Reducing Capital Expenditures" to "Time to Market".

Traditional data center services are now commoditized on the public Internet. Getting a Virtual Web or Database Server up and running these days, in either Windows or Linux variants, is a mere matter of minutes. Email and File Transfer Services are but a click away. Storage... Gigs of storage... are FREE for the taking!

Regardless of the reason, your data center is expanding beyond your bricks and mortar controls. Many call this the Shrinking Perimeter. Firewalls at the edge of your network are no longer adequate, and provide a false sense of comfort.

To add insult to injury, your end users are getting restless. They no longer want to wait for your technology refresh cycles to bring them the newest, last year's PC. They want access to their applications and information from the latest technology on the planet, and will fund that purchase themselves just for the privilege.

BYOD is here already, in one form or another. Guaranteed, your executives are already using the latest iPad, iPhone, Samsung Galaxy, or BlackBerry PlayBook. Go ahead! Tell me I'm wrong! Even if you have not made provisions for them to connect, they are in your offices, on your campuses, in your data centers.

Please forgive me, but I will get into the complexities of Mobile Management (Mobile Device Management vs. Mobile Application Management vs. Mobile Data Management) in another article...

Where there's a Mobile Hotspot, there's a way...

I'm just saying...

In the very near future, if it hasn't happened to you already, you will have to manage end users connecting from a multitude of diverse devices, from anywhere they are in the world, to your corporate applications... which of course also reside anywhere in the world. Your role in all of this, even in the face of security, is to be an enabler. Stand in their way, and they will surely find the path around you.

Don't take my word for it. Go talk to your lines of business. I'm sure they have already engaged the likes of Salesforce, Amazon, Workday, Box, or IBM Cloud Storage. Validate this in your URL filter logs... They are going there already.
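If you want a quick way to run that check, here is a minimal sketch in Python. It assumes your URL filter can export plain-text log lines that contain full URLs; the log layout, the sample lines, the function names, and the domain watchlist are all illustrative assumptions, not the format of any particular product. Adjust them to whatever your filter actually produces.

```python
import re
from collections import Counter
from urllib.parse import urlsplit

# Illustrative watchlist (an assumption): map a registered domain to a
# provider name. Extend it with the providers relevant to your organization.
CLOUD_DOMAINS = {
    "salesforce.com": "Salesforce",
    "amazonaws.com": "Amazon",
    "workday.com": "Workday",
    "box.com": "Box",
}

# Assumes each log line contains zero or more full http(s) URLs.
URL_PATTERN = re.compile(r"https?://\S+")


def provider_for_host(host):
    """Return the provider name if host is a watched domain or a subdomain of one."""
    host = host.lower().rstrip(".")
    for domain, provider in CLOUD_DOMAINS.items():
        if host == domain or host.endswith("." + domain):
            return provider
    return None


def count_cloud_hits(log_lines):
    """Count requests per cloud provider across an iterable of log lines."""
    hits = Counter()
    for line in log_lines:
        for url in URL_PATTERN.findall(line):
            try:
                host = urlsplit(url).hostname
            except ValueError:
                continue  # skip malformed URLs rather than abort the scan
            if host is None:
                continue
            provider = provider_for_host(host)
            if provider is not None:
                hits[provider] += 1
    return hits


if __name__ == "__main__":
    # Made-up sample lines, only to show the shape of the input.
    sample = [
        "10.0.0.5 GET https://na1.salesforce.com/home 200",
        "10.0.0.9 GET https://s3.amazonaws.com/bucket/file 200",
        "10.0.0.7 GET http://intranet.example.com/ 200",
        "10.0.0.5 GET https://app.box.com/files 200",
    ]
    for provider, count in count_cloud_hits(sample).most_common():
        print(f"{provider}: {count}")
```

To run it against a real export, pass an open file handle to `count_cloud_hits` instead of the sample list. Matching on the domain suffix (rather than a plain substring) keeps lookalike hosts such as `notbox.com` out of the count.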

So.... Give them what they want, in a way that allows you to maintain your controls.

Lock down access to your servers and data on the system itself! Keep your Data Security as close to the data as possible.

Then, entertain the ability to stretch outside of the concrete that houses your data center. Make external Cloud providers a part of your roadmap, a part of your delivery model where the appropriate controls can be maintained, regardless of geography.

Negotiate a Master Services Agreement with a reputable Cloud Storage Provider, such that YOU maintain the encryption keys, and can facilitate user provisioning / deprovisioning yourself. Make sure that the provider can comply with your Corporate regulatory requirements, such as scheduled security assessments/audits.

Make sure that your Cloud providers can feed appropriate logging and alerting to your Security Operations Center!

Likewise, find a suitable Provider for Infrastructure, Platform, and Software services, and negotiate an Enterprise Relationship with them. Then provide these options, within your controls framework, to your users.

Simply provide for the path of least resistance, while embedding all of the controls required to manage these providers.